Sometimes we need background jobs that run periodically, such as a mail client checking for new e-mail every 5 minutes.

If you set up a Timer like this you may run into trouble:

    Timer jobTimer = new Timer();
    jobTimer.Interval = 60; // System.Timers.Timer.Interval is in milliseconds
    jobTimer.Elapsed += new ElapsedEventHandler(RunJob);
    jobTimer.Start();

    void RunJob(object sender, ElapsedEventArgs e)
    {
        // do some work
    }

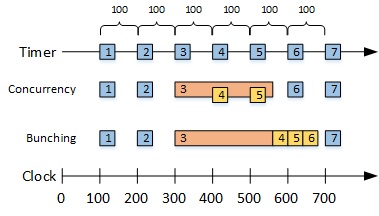

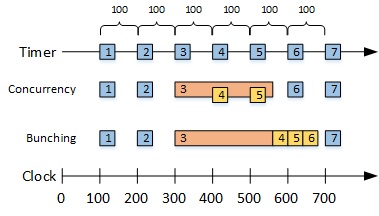

The problem is that there’s nothing to address what happens if the work takes longer than the interval. If it does, you’ll get some unplanned concurrency. This may not matter, depending on the work you are doing – but for this post, let’s assume that the concurrency is undesirable. I’ll also address another concern – controlling the thread on which the work is run.

Let’s say you try to resolve the unplanned concurrency with a lock:

    object gate = new object();

    void RunJob(object sender, ElapsedEventArgs e)
    {
        lock (gate)
        {
            // do some work
        }
    }

This will remove the concurrency, but will cause successive work to queue up on the lock. If you start to overrun regularly, the queue could just keep growing and you’ll get some unplanned bunching.

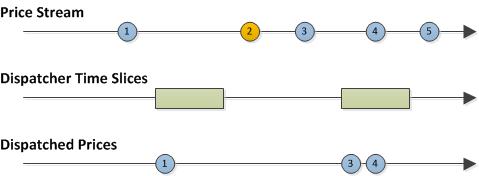

I’ve illustrated the situation before and after the lock is introduced below:

Now, there are a few ways to address this problem and most solutions fall into one of two groups. The first is to keep the periodic timer, but skip the work if a previous invocation is still running. You can do that like this:

    object gate = new object();

    void RunJob(object sender, ElapsedEventArgs e)
    {
        // Skip this tick entirely if a previous invocation still holds the lock.
        if (Monitor.TryEnter(gate))
        {
            try
            {
                // do some work
            }
            finally
            {
                Monitor.Exit(gate);
            }
        }
    }

However, this leads to an unpredictable interval between invocations. Sometimes the lock happens to be released just as a new iteration tries to acquire it; other times the lock is only just missed, and you’ll go almost double the interval between invocations.

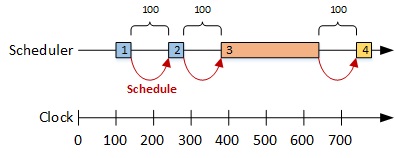

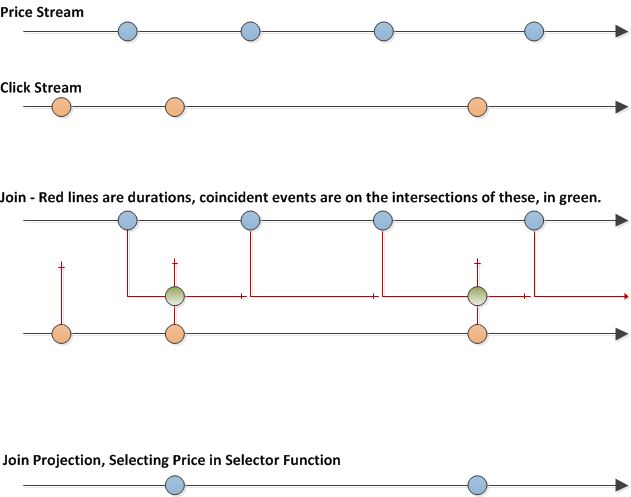

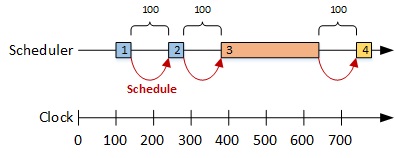

The second group of solutions elects not to use a timer that fires periodically. Instead, we schedule the next iteration only after the current one has finished. That is, instead of taking the interval to be from the start of one iteration to the start of the next, we take it to be from the end of one iteration to the start of the next. Since we don’t know how long the work will take, we have to wait until it is finished before scheduling the next iteration. This guarantees a predictable gap between iterations during which no work is running.

The general idea looks like this:

If you stick with using one of the various Timer objects offered in the .NET BCL, then this is fairly easy to implement. You could use Timer.AutoReset = false and restart the Timer in the handler, for example.
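As a rough sketch of that Timer-based approach, the class below wraps a System.Timers.Timer with AutoReset set to false and restarts it once the work has finished. The RecurringJob name, its constructor, and the stopped flag are my own inventions for illustration, not anything from the BCL, and I’m assuming the interval is greater than zero.

```csharp
using System;

// Hypothetical wrapper (not part of the BCL): runs `work`, then waits `interval`
// after the work has finished before running it again.
public sealed class RecurringJob : IDisposable
{
    private readonly object sync = new object();
    private readonly System.Timers.Timer timer;
    private readonly Action work;
    private bool stopped;

    public RecurringJob(TimeSpan interval, Action work)
    {
        this.work = work;
        timer = new System.Timers.Timer(interval.TotalMilliseconds);
        timer.AutoReset = false;                 // fire once per Start()
        timer.Elapsed += (sender, e) => RunOnce();
        timer.Start();
    }

    private void RunOnce()
    {
        try
        {
            work();
        }
        finally
        {
            // Re-arm only after the work is done, so iterations never overlap.
            lock (sync)
            {
                if (!stopped)
                {
                    timer.Start();
                }
            }
        }
    }

    public void Dispose()
    {
        // The lock keeps a finishing iteration from restarting a disposed timer.
        lock (sync)
        {
            stopped = true;
            timer.Dispose();
        }
    }
}
```

You would create it with something like `new RecurringJob(TimeSpan.FromSeconds(5), DoWork)`, where DoWork is whatever method does your work, and dispose it to stop. As with the Rx version, disposing does not interrupt an iteration that is already running.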

However, we will look at an elegant solution offered by Rx. Here’s an extension method on an Rx Scheduler (more on this later) that will schedule a recurring task with the behaviour illustrated above:

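    using System;
    using System.Reactive.Concurrency;

    // Extension methods must live in a static class; the class name here is arbitrary.
    public static class SchedulerExtensions
    {
        public static IDisposable ScheduleRecurringAction(
            this IScheduler scheduler, TimeSpan interval, Action action)
        {
            // Schedule the first run, then re-schedule only after each run completes.
            return scheduler.Schedule(interval, scheduleNext =>
            {
                action();
                scheduleNext(interval);
            });
        }
    }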

And here’s how you could call it:

    TimeSpan interval = TimeSpan.FromSeconds(5);
    Action work = () => Console.WriteLine("Doing some work...");

    var schedule =
        Scheduler.Default.ScheduleRecurringAction(interval, work);

    Console.WriteLine("Press return to stop.");
    Console.ReadLine();

    // Cancels the pending iteration and stops any further ones being scheduled.
    schedule.Dispose();

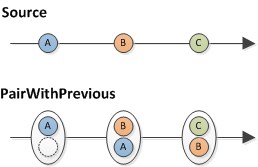

This code probably looks a little odd if you haven’t come across recursive scheduling in Rx before. The overload of Schedule we are using schedules work, but also provides an Action<TimeSpan> for scheduling the next piece of work after a given delay. In the code above, this is the scheduleNext parameter.

Note it’s entirely optional whether you actually use this Action – not calling it will exit the recursive loop. What’s going on under the covers uses a technique called trampolining that avoids generating massive stacks. It’s an idea that’s beyond the scope of this blog post – but if you are feeling brave you can read more about it in this fascinating article by Bart de Smet!
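To make the "not calling it exits the loop" point concrete, here is a small sketch of a variant that runs the action a limited number of times. The ScheduleBoundedAction name, the BoundedSchedulerExtensions class, and the maxRuns parameter are hypothetical, made up for this illustration; only the Schedule overload itself comes from Rx.

```csharp
using System;
using System.Reactive.Concurrency;
using System.Reactive.Disposables;

public static class BoundedSchedulerExtensions
{
    // Hypothetical variant of ScheduleRecurringAction: runs `action` at most
    // `maxRuns` times, ending the loop simply by not calling scheduleNext.
    public static IDisposable ScheduleBoundedAction(
        this IScheduler scheduler, TimeSpan interval, int maxRuns, Action action)
    {
        if (maxRuns <= 0)
        {
            return Disposable.Empty; // nothing to schedule
        }

        int remaining = maxRuns;
        return scheduler.Schedule(interval, scheduleNext =>
        {
            action();
            remaining--;
            if (remaining > 0)
            {
                scheduleNext(interval); // keep the recursion going
            }
            // Otherwise fall through: nothing further is scheduled.
        });
    }
}
```

Calling `Scheduler.Default.ScheduleBoundedAction(TimeSpan.FromSeconds(5), 3, work)` should run the work three times and then go quiet, without anyone having to call Dispose.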

The IDisposable that’s returned by the Schedule call can be used to cancel the scheduled work. Cleverly, this will work not just for the first item scheduled; it’s also good for the work subsequently scheduled recursively.

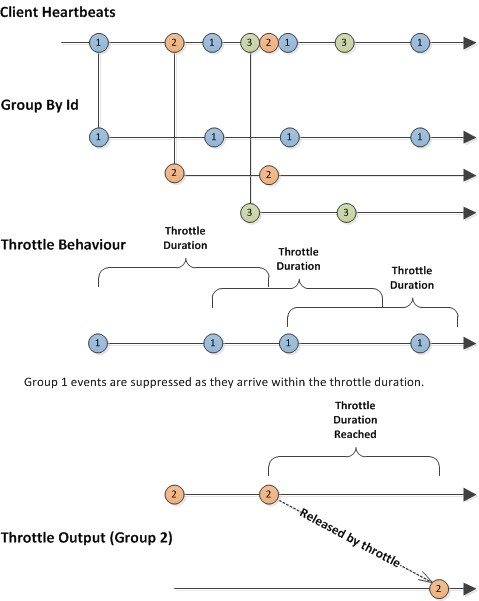

As previously mentioned, the code above is an extension method on IScheduler. Rx provides a number of IScheduler implementations. These are responsible for managing scheduled work, and in particular for deciding on what threads work should take place.

The Scheduler.Default property returns a platform-specific (as of Rx 2.0) scheduler that introduces the “cheapest” form of concurrency. For .NET 4.5 this is the ThreadPoolScheduler, which means that each iteration will run on a thread pool thread. Other schedulers include the NewThreadScheduler, TaskPoolScheduler, ImmediateScheduler, CurrentThreadScheduler and DispatcherScheduler. I’ll save a fuller discussion of these for another time.
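Because the recurring logic hangs off IScheduler, changing where the work runs should just be a matter of picking a different scheduler. The sketch below repeats the extension method from this post so it compiles on its own (in a real project you would keep a single copy). The SchedulerChoiceDemo class, the one second interval and the five second run time are arbitrary choices of mine.

```csharp
using System;
using System.Reactive.Concurrency;
using System.Threading;

public static class SchedulerChoiceDemo
{
    // The extension method from this post, repeated so the sketch is self-contained.
    public static IDisposable ScheduleRecurringAction(
        this IScheduler scheduler, TimeSpan interval, Action action)
    {
        return scheduler.Schedule(interval, scheduleNext =>
        {
            action();
            scheduleNext(interval);
        });
    }

    public static void Main()
    {
        Action work = () => Console.WriteLine(
            "Working on thread {0}", Thread.CurrentThread.ManagedThreadId);

        // Only this line decides where the work runs.
        IScheduler scheduler = NewThreadScheduler.Default; // or TaskPoolScheduler.Default

        using (scheduler.ScheduleRecurringAction(TimeSpan.FromSeconds(1), work))
        {
            Thread.Sleep(TimeSpan.FromSeconds(5)); // let a few iterations run
        }
    }
}
```

The thread ids you see printed will depend on the scheduler you choose and on the platform, so treat the output as something to experiment with and not as a guarantee.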

If you were really paying attention, you’ll have noticed that while calling Dispose will cancel any scheduled actions not started and prevent any further actions from being scheduled, it won’t cancel any action already started. If you need to cleanly cancel a longer running piece of work, that might be problematic.

Next time we will look at a technique to cooperatively cancel long-running actions, and abort any that run for too long.